Rock climbing is a physically and mentally demanding sport that tests the limits of one’s strength, endurance, agility, balance and concentration. Sasha DiGiulian is one of the best rock climbers in the world. I can’t get past 15 feet without starting to panic and freak out. Maybe it’s because I’m afraid of heights…and overweight, but I’m just not right for that kind of challenge.

Like rock climbing, scaling a database is a challenge. It’s one of the driving forces of the NoSQL movement, so when it comes to your database, you’ll want to make sure you are making the right choices. Scaling up versus scaling out is a divisive argument. Back in 2009, Markus Frind (of Plenty of Fish) bucked the scale-out trend and showed off his $100k+, 512GB RAM database server. Jeff Atwood (of Stack Overflow) offered a different view and some cost comparisons. That view wasn’t shared by David Heinemeier Hansson (of 37signals) when he thanked the hardware guys for letting them keep things simple and punt on sharding their database.

The other day I ran into a tweet by Matt Stancliff about how cheap 1TB RAM servers are nowadays:

https://twitter.com/mattsta/status/402514057779249152

I didn’t doubt it, but where could one get such beasts?

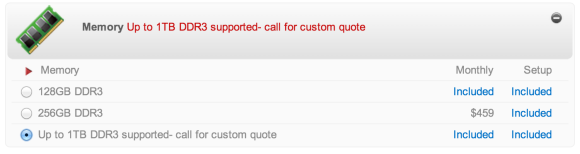

At $31,141, or about $1,000 a month to lease, we can get close at thinkmate.com, but not quite. A little more digging, however, and…

Let me zoom in on that a little bit…

But you say you don’t have $30k? Well, how about leasing one for about $1k a month for 36 months? Your start-up may not be around 36 months from now? Not with that kind of pessimism it won’t. OK, how about we just get one hosted for us?

No, really, call them for a quote: Hivelocity will probably give you a number between $2,500 and $3,000 a month. If you can manage your own servers, try WebNX; they’ll come in under $2k if you ask nicely. Even Amazon is getting in on the high-RAM action: the cr1.8xlarge instances come with 244GB of RAM for around $2,500 a month.
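To put those options side by side, here is a small worked comparison of 36-month totals. It only multiplies out the rough figures quoted above (the $31,141 price, the ~$1k/month lease, and the hosted quotes); WebNX’s “under $2k” is treated as an upper bound, the Amazon instance has far less RAM than the others, and the purchase price leaves out hosting and power.

```python
MONTHS = 36  # the lease term quoted above

# 36-month totals built from the rough figures quoted above (US dollars).
# Hosted and cloud prices are ballpark quotes, not list prices.
totals = {
    "buy outright": 31_141,
    "lease at ~$1k/month": 1_000 * MONTHS,
    "WebNX, upper bound at $2k/month": 2_000 * MONTHS,
    "Hivelocity, low quote $2.5k/month": 2_500 * MONTHS,
    "Amazon cr1.8xlarge (244GB), ~$2.5k/month": 2_500 * MONTHS,
    "Hivelocity, high quote $3k/month": 3_000 * MONTHS,
}

# Print the options from cheapest to most expensive over the full term.
for option, dollars in sorted(totals.items(), key=lambda item: item[1]):
    print(f"{option:42s} ${dollars:>9,}")
```

Over three years the lease costs about $4,859 more than buying outright, which is the price of not tying up $30k of a start-up’s cash on day one.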

So consider this a public service announcement: when it comes to databases, the more RAM the better. You’ll get the most performance out of your Neo4j instance if your graph fits in both of its caches. First, the file buffer cache. Take a look at these five files in your graph.db directory:

ls -alh /neo4j/data/graph.db/neostore*
-rw-r--r-- 1 neo4j neo4j 149M Nov 11 16:39 neostore.nodestore.db
-rw-r--r-- 1 neo4j neo4j 2.2G Nov 11 16:39 neostore.propertystore.db
-rw-r--r-- 1 neo4j neo4j 128 Nov 11 16:14 neostore.propertystore.db.arrays
-rw-r--r-- 1 neo4j neo4j 3.0G Nov 11 16:39 neostore.propertystore.db.strings
-rw-r--r-- 1 neo4j neo4j 687M Nov 11 16:39 neostore.relationshipstore.db

…and make sure the memory-mapped I/O settings in neo4j.properties are equal to or just a little bigger than those file sizes:

neostore.nodestore.db.mapped_memory=200M
neostore.propertystore.db.mapped_memory=2300M
neostore.propertystore.db.arrays.mapped_memory=5M
neostore.propertystore.db.strings.mapped_memory=3200M
neostore.relationshipstore.db.mapped_memory=800M
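If you would rather not eyeball the ls output, a small script can do the “same size or a little bigger” step for you. This is a minimal sketch, assuming Python 3 on the database server and the /neo4j/data/graph.db path used above; the 10% headroom and rounding up to the next 100M are hypothetical rules of thumb, not Neo4j defaults, so the output will not match the hand-picked values above exactly (for example, it suggests 100M for the tiny arrays store).

```python
import math
import os
import sys

# The five store files discussed above; each setting is "<file name>.mapped_memory".
STORE_FILES = [
    "neostore.nodestore.db",
    "neostore.propertystore.db",
    "neostore.propertystore.db.arrays",
    "neostore.propertystore.db.strings",
    "neostore.relationshipstore.db",
]

MB = 1024 * 1024


def suggest_mapped_memory(sizes_bytes, headroom=0.10, step_mb=100):
    """Return {setting_name: megabytes}: file size plus `headroom`, rounded up
    to a multiple of `step_mb` (both parameters are assumed rules of thumb)."""
    settings = {}
    for name, size in sizes_bytes.items():
        target_mb = size * (1 + headroom) / MB
        rounded = max(step_mb, math.ceil(target_mb / step_mb) * step_mb)
        settings[name + ".mapped_memory"] = rounded
    return settings


def read_store_sizes(graph_db_dir):
    """Return {file_name: size_in_bytes} for the store files present in graph_db_dir."""
    sizes = {}
    for name in STORE_FILES:
        path = os.path.join(graph_db_dir, name)
        if os.path.exists(path):
            sizes[name] = os.path.getsize(path)
    return sizes


if __name__ == "__main__":
    directory = sys.argv[1] if len(sys.argv) > 1 else "/neo4j/data/graph.db"
    for key, mb in suggest_mapped_memory(read_store_sizes(directory)).items():
        print(f"{key}={mb}M")
```

Paste the printed lines into neo4j.properties, then sanity-check them against what you actually want to keep in RAM; rerun it as the graph grows.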

Next, the object cache, which is configured differently. In memory, each node holds pointers to all the relationships that connect it to the rest of the graph, so even if your node store file is small, you’ll want to account for the number of relationships per node, as well as the properties on both nodes and relationships, when sizing it. Here is an example of what it could look like on a server with 24GB of RAM:

cache_type=gcr
node_cache_array_fraction=10
relationship_cache_array_fraction=10
node_cache_size=4000M
relationship_cache_size=2500M
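The sizing reasoning above can be turned into a rough estimator. This is a hypothetical back-of-envelope model, not Neo4j’s actual memory accounting: the per-object byte costs in `ObjectCosts` are placeholders you would need to measure (or look up for your Neo4j version), and the graph in the example is made up.

```python
from dataclasses import dataclass

MB = 1024 * 1024


@dataclass
class ObjectCosts:
    """Hypothetical per-object costs in bytes. Measure these for your Neo4j
    version; they are placeholders, not official figures."""
    node_bytes: int
    relationship_bytes: int
    relationship_pointer_bytes: int  # one relationship reference held by a node
    property_bytes: int


def estimate_object_cache(nodes, relationships, props_per_node, props_per_rel, costs):
    """Rough bytes needed to hold the whole graph in the node and relationship caches."""
    # Every relationship is referenced by both of its end nodes (self-loops aside),
    # so the nodes collectively hold about 2 * relationships pointers.
    node_cache = (nodes * (costs.node_bytes + props_per_node * costs.property_bytes)
                  + 2 * relationships * costs.relationship_pointer_bytes)
    relationship_cache = relationships * (costs.relationship_bytes
                                          + props_per_rel * costs.property_bytes)
    return node_cache, relationship_cache


if __name__ == "__main__":
    # Made-up graph and made-up costs, only to show the shape of the calculation.
    costs = ObjectCosts(node_bytes=300, relationship_bytes=200,
                        relationship_pointer_bytes=40, property_bytes=100)
    node_b, rel_b = estimate_object_cache(10_000_000, 50_000_000, 3, 1, costs)
    print(f"node_cache_size~{node_b / MB:.0f}M relationship_cache_size~{rel_b / MB:.0f}M")
```

The point of the `2 * relationships` term is the one made above: a densely connected graph can need a big node cache even when each node carries few properties.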

Also remember that the GCR cache lives inside your JVM’s heap, so take that into account when sizing it. In the neo4j-wrapper.conf file, set the heap size large enough to hold your working memory plus the object cache:

# Initial Java Heap Size (in MB)
wrapper.java.initmemory=10240
# Maximum Java Heap Size (in MB)
wrapper.java.maxmemory=10240
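It is worth checking that the whole budget fits on the box. A minimal sketch of that arithmetic for the 24GB example, assuming the memory-mapped store buffers live outside the JVM heap while the object cache lives inside it, and taking “24GB” to mean 24 × 1024 MB:

```python
def memory_budget(total_ram_mb, heap_mb, object_cache_mb, mapped_mb):
    """Return (heap left for working memory, RAM left for everything else) in MB.

    Assumes the object cache lives inside the heap and the memory-mapped
    store buffers live outside it.
    """
    if object_cache_mb > heap_mb:
        raise ValueError("object cache does not fit in the heap")
    working_memory = heap_mb - object_cache_mb
    left_over = total_ram_mb - heap_mb - mapped_mb
    if left_over < 0:
        raise ValueError("heap plus mapped memory exceeds physical RAM")
    return working_memory, left_over


# Numbers from the example configuration above.
mapped = 200 + 2300 + 5 + 3200 + 800   # the five neostore.*.mapped_memory settings
object_cache = 4000 + 2500             # node_cache_size + relationship_cache_size
working, left_over = memory_budget(24 * 1024, 10240, object_cache, mapped)
print(f"heap left for working memory: {working}M")        # 3740M
print(f"left for the OS and other processes: {left_over}M")  # 7831M
```

With these settings the heap keeps about 3.7GB free beyond the object cache, and roughly 7.6GB of RAM stays available to the operating system.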

The GCR cache is only available in the Enterprise edition, but you’ll find it superior to the other caches under high load. Once your database is configured right for one server, you can scale out to a cluster of them if your application needs more performance. Again, clustering is only available in the Enterprise edition, but recently introduced pricing makes it quite affordable for start-ups and enterprises alike. Oh… and if you have a little extra money, consider putting SSDs in these servers as well. You won’t regret it. As always, test, test, test, test your configurations and find the right one for your application.